OpenVINO™ Workflow Consolidation Tool Tutorial

About

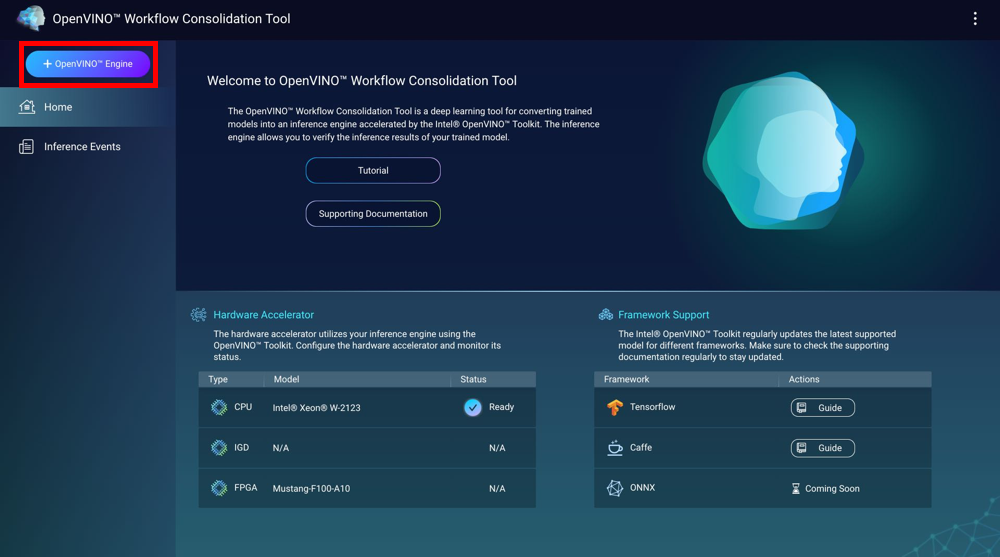

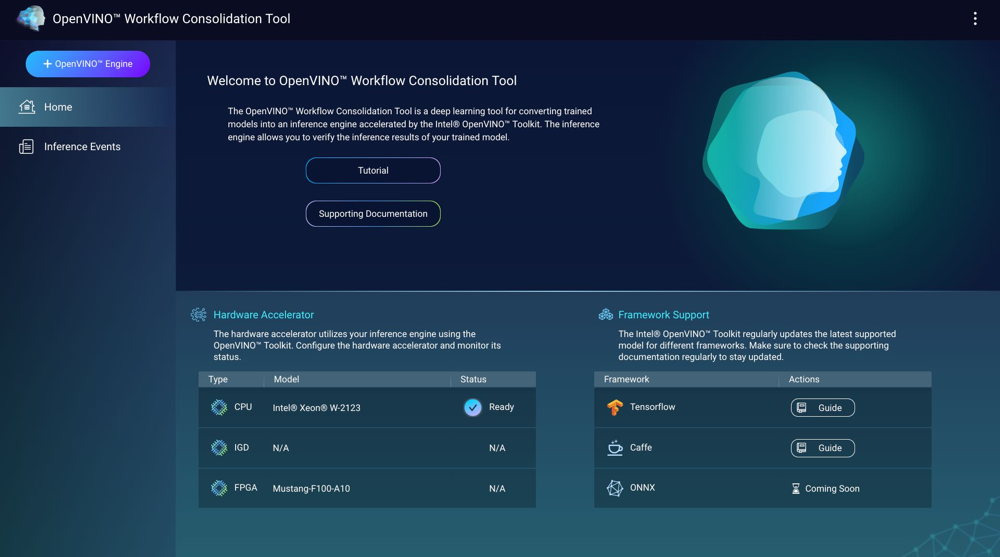

The OpenVINO™ Workflow Consolidation Tool (OWCT) is a deep learning tool for converting trained models into inference engines accelerated by the Intel® Distribution of OpenVINO™ toolkit. Inference engines allow you to verify the inference results of trained models.

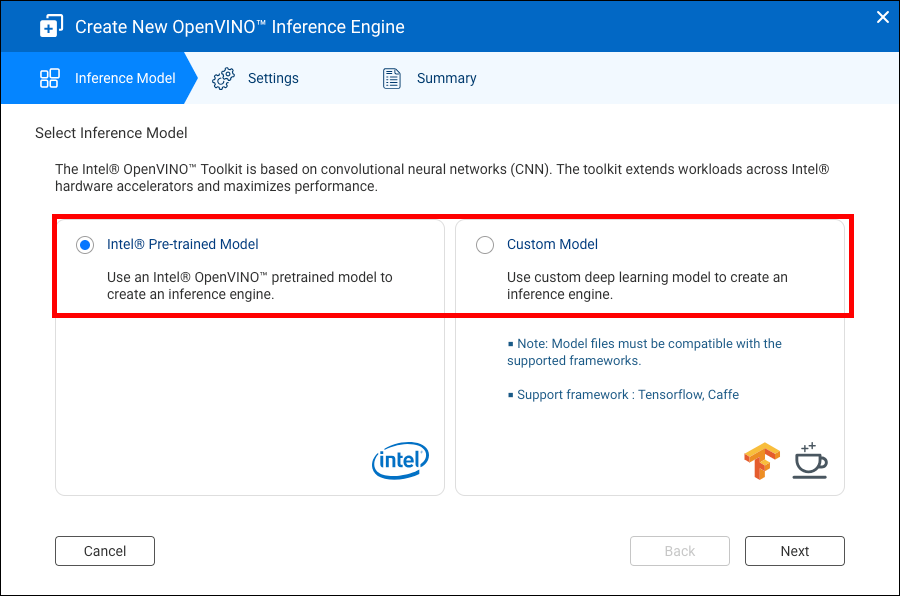

The Intel® Distribution of OpenVINO™ toolkit is based on convolutional neural networks (CNN). The toolkit extends workloads across Intel® hardware accelerators to maximize performance.

Container Station must be installed in order to use the OWCT.

- Compatibility

- Hardware Accelerators

- Creating Inference Engines

- Using Computer Vision with Inference Engines

- Managing Inference Engines

Compatibility

| Platform | Support |
|---|---|
| NAS models | Note: Only Intel-based NAS models support the OpenVINO™ Workflow Consolidation Tool. |
| OS | QTS 4.4 |
| Intel® Distribution of OpenVINO™ toolkit version | 2018R5. For details, go to https://software.intel.com/en-us/articles/OpenVINO-RelNotes. |

Hardware Accelerators

You can use hardware accelerators installed in your NAS to improve the performance of your inference engines.

Hardware accelerators installed in the NAS are displayed on the Home screen.

Hardware accelerators with the status Ready can be used when creating inference engines.

If the status displayed is Settings, go to to set up the hardware accelerator.

- To use field-programmable gate array (FPGA) cards on a QNAP NAS, you must disable virtual machine (VM) pass-through.

- Each FPGA resource supports one inference engine.

- Each vision processing unit (VPU) resource supports one inference engine.
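The two rules above (only accelerators with the status Ready can be used, and each FPGA or VPU resource supports one inference engine) can be combined into a quick capacity estimate. The sketch below is purely illustrative: the `Accelerator` class, its fields, and `max_inference_engines` are hypothetical names, not part of the OWCT, and the resource counts are example values rather than the specifications of any real card.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical model of an accelerator entry shown on the OWCT Home screen."""
    name: str
    kind: str       # assumed labels: "FPGA" or "VPU"
    status: str     # e.g. "Ready" or "Settings"
    resources: int  # number of FPGA/VPU resources the card exposes (assumed field)


def max_inference_engines(accelerators):
    """Estimate how many inference engines the installed accelerators can host.

    Assumes only accelerators with status "Ready" are usable and that each
    FPGA or VPU resource supports exactly one inference engine.
    """
    return sum(
        acc.resources
        for acc in accelerators
        if acc.status == "Ready" and acc.kind in ("FPGA", "VPU")
    )


if __name__ == "__main__":
    # Example values only; the second VPU card still needs setup.
    cards = [
        Accelerator("fpga-card", "FPGA", "Ready", 1),
        Accelerator("vpu-card", "VPU", "Ready", 8),
        Accelerator("vpu-card-2", "VPU", "Settings", 8),
    ]
    print(max_inference_engines(cards))  # prints 9
```

Accelerators still in the Settings state contribute nothing until they are set up, which is why the second VPU card is excluded from the count.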

Creating Inference Engines

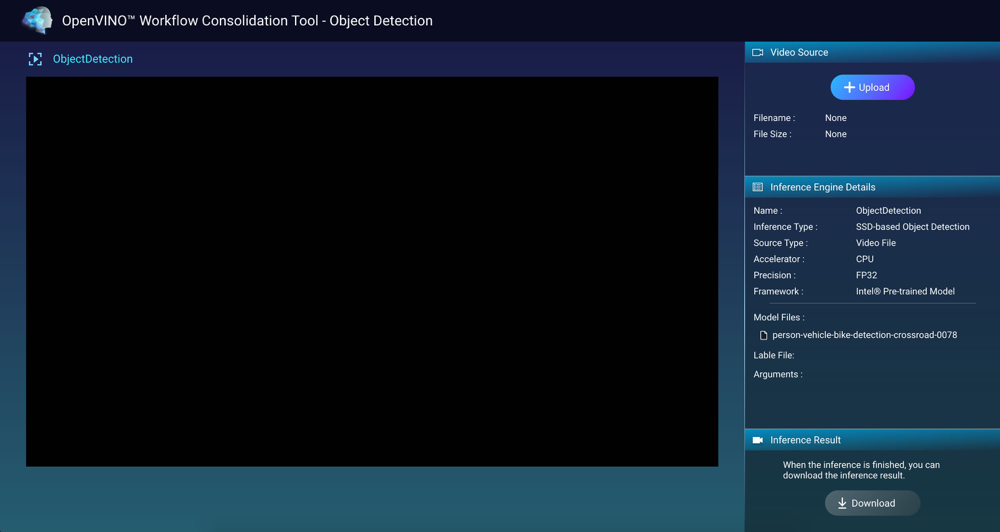

Use the OWCT to create inference engines and configure inference parameters.

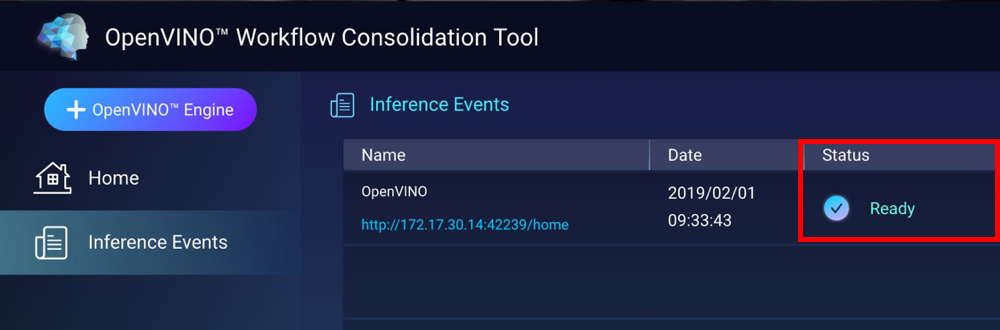

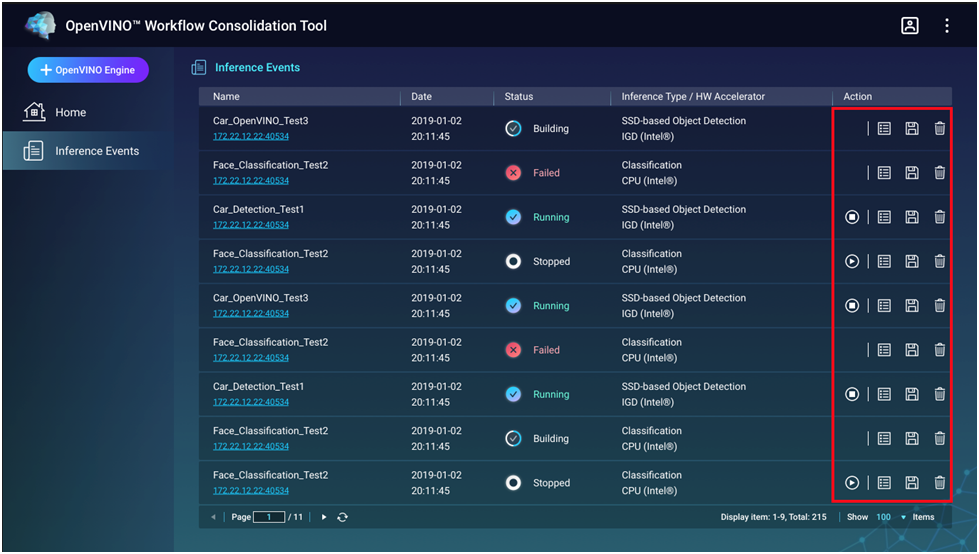

The OWCT creates the inference engine and displays it on the Inference Events screen.

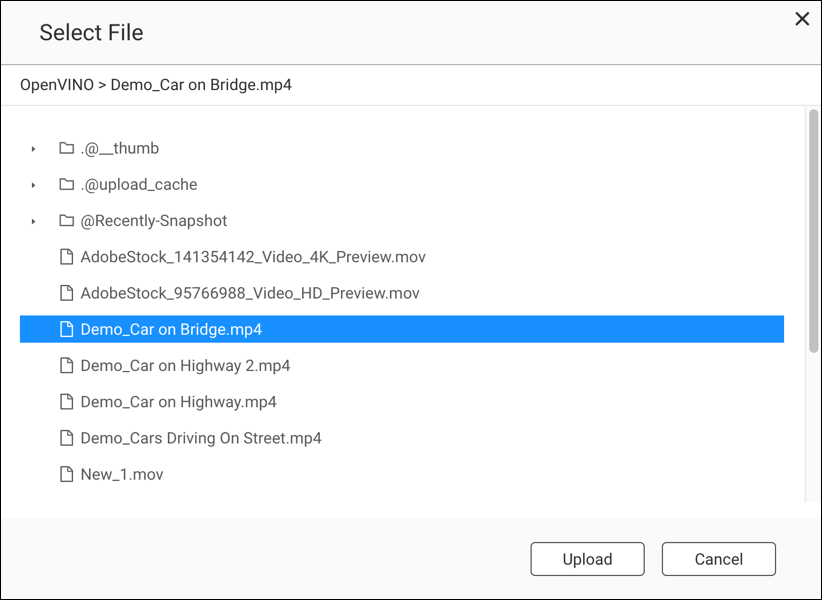

Using Computer Vision with Inference Engines

Managing Inference Engines

You can view and manage inference engines on the Inference Events screen.

| Button | Description |
|---|---|
|  | Starts the inference process. |
|  | Stops the inference process. |
|  | Displays details and the log status of the inference engine. |
|  | Saves the inference engine as an Intermediate Representation (IR) file for advanced applications. |
|  | Deletes the inference engine. |