How do I use large language models for Qsirch RAG search?

Applicable Products

Qsirch 6.0.0 (or later) on all platforms

Qsirch RAG Search with Cloud and On-Premise LLMs

Qsirch supports Retrieval-Augmented Generation (RAG) with cloud-based and on-premise large language models (LLMs) to deliver accurate, context-aware responses. When you enter a query, Qsirch retrieves relevant documents from your NAS and passes them to the language model as context. Combining search with generative AI in this way lets Qsirch generate answers that are grounded in your own data.
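The retrieve-then-generate flow described above can be sketched as follows. This is a generic illustration, not Qsirch's implementation: the documents are hypothetical, and simple word overlap stands in for the embedding-based retrieval that RAG systems typically use.

```python
import re

# Hypothetical in-memory documents; Qsirch indexes real files on the NAS instead.
DOCS = {
    "policy.txt": "Employees may work remotely up to three days per week.",
    "backup.txt": "Snapshots run nightly and are kept for 30 days.",
    "travel.txt": "Submit travel requests at least two weeks in advance.",
}


def tokens(text: str) -> set[str]:
    """Lowercase word set; a toy stand-in for embedding-based similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k documents sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(docs, key=lambda name: len(q & tokens(docs[name])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Place the retrieved passages ahead of the question as grounding context."""
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How long are snapshots kept?", DOCS, k=1))
```

The prompt that reaches the language model contains the retrieved passage, so the answer can cite the 30-day retention period even though the model never saw that file during training.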

Qsirch also supports multi-turn conversation for natural, context-aware interactions. Users can ask follow-up questions without repeating earlier details, because the conversation context is preserved across turns. Multi-turn conversation works in both cloud and on-premise RAG modes.
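A common way to preserve context across turns is to resend earlier questions and answers with each new request. The sketch below assumes the role/content message format used by OpenAI-style chat APIs; how Qsirch stores conversation history internally is not described here, and the `max_turns` cap is a hypothetical safeguard for the model's context window.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps prior turns so a follow-up question is sent together with its context."""

    system: str = "Answer using the retrieved NAS documents."
    max_turns: int = 5  # hypothetical cap on remembered question/answer pairs
    turns: list[dict[str, str]] = field(default_factory=list)

    def add_exchange(self, question: str, answer: str) -> None:
        """Record one completed turn, dropping the oldest pairs beyond the cap."""
        self.turns.append({"role": "user", "content": question})
        self.turns.append({"role": "assistant", "content": answer})
        excess = len(self.turns) - 2 * self.max_turns
        if excess > 0:
            del self.turns[:excess]

    def messages_for(self, follow_up: str) -> list[dict[str, str]]:
        """Build the message list for the next request, history included."""
        return (
            [{"role": "system", "content": self.system}]
            + self.turns
            + [{"role": "user", "content": follow_up}]
        )


if __name__ == "__main__":
    conv = Conversation()
    conv.add_exchange("Which projects started in 2023?", "Project A and Project B.")
    for message in conv.messages_for("Who leads the second one?"):
        print(message["role"], ":", message["content"])
```

Because the earlier exchange travels with the follow-up, the model can resolve "the second one" to Project B without the user restating it.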

Integrate RAG with Cloud-based AI Services

To use RAG search with a cloud-based AI service, you must first obtain an API key from the service of your choice.

ChatGPT (OpenAI API)

ChatGPT (OpenAI API) provides GPT models for both RAG embeddings and generative responses. To obtain an API key:

- Sign up for an account on OpenAI: https://auth.openai.com/create-account

- Create an API key in the account settings.

For more information, see OpenAI API Documentation.
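Qsirch has its own key verification option, but you may also want to confirm a new key works before entering it. A minimal sketch, assuming Python's standard library only: it calls the OpenAI `GET /v1/models` endpoint, which returns HTTP 401 when the key is invalid. The helper names are illustrative.

```python
import json
import urllib.error
import urllib.request

OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_openai_key_check(api_key: str) -> urllib.request.Request:
    """Prepare a lightweight authenticated request that lists available models."""
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def key_is_valid(api_key: str, timeout: float = 10.0) -> bool:
    """Return True if the key is accepted, False on HTTP 401; other errors are re-raised."""
    try:
        with urllib.request.urlopen(build_openai_key_check(api_key), timeout=timeout) as resp:
            json.load(resp)  # the body lists the models this key can access
            return True
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False
        raise
```

Calling `key_is_valid("sk-...")` performs a network request; keep real keys out of scripts and shell history, for example by reading them from an environment variable.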

Azure OpenAI

Azure OpenAI provides access to OpenAI models (such as GPT-4.1) through Azure infrastructure, which makes it well suited to enterprise deployments. To obtain an API key and endpoint:

- Sign in to the Azure Portal.

- Select your OpenAI resource (or create one if you do not already have one).

- On the left menu, click Keys and Endpoint.

- Copy one of the API keys, and copy the endpoint (base URL) used for API requests.

For more information, see Azure OpenAI Documentation.
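Unlike the OpenAI API, Azure OpenAI authenticates with an `api-key` header and routes requests by deployment name plus an `api-version` query parameter, which is why both the key and the endpoint are needed. A minimal sketch, assuming a chat model deployment already exists on the resource; the deployment name and the default API version below are placeholders, so check the Azure OpenAI documentation for versions your resource supports.

```python
import json
import urllib.parse
import urllib.request


def build_azure_chat_request(
    endpoint: str,      # the endpoint copied from Keys and Endpoint
    api_key: str,       # one of the keys shown on the same page
    deployment: str,    # hypothetical name of a model deployment on the resource
    api_version: str = "2024-10-21",  # assumed; use a version your resource supports
) -> urllib.request.Request:
    """Prepare a one-message chat completion request against an Azure OpenAI deployment."""
    path = f"/openai/deployments/{urllib.parse.quote(deployment)}/chat/completions"
    query = urllib.parse.urlencode({"api-version": api_version})
    body = json.dumps({"messages": [{"role": "user", "content": "ping"}]})
    return urllib.request.Request(
        f"{endpoint.rstrip('/')}{path}?{query}",
        data=body.encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen(req, timeout=10)` succeeds only when the endpoint, key, and deployment name all match, which makes it a quick end-to-end check of the values you copied.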

Gemini (Google Cloud AI)

Gemini (Google Cloud AI) provides a set of models designed for high-performance reasoning and RAG. To obtain an API key:

- Visit the Google Gemini API Documentation and select Get a Gemini API key in Google AI Studio.

- Sign in to your Google account.

- Click Create API key.

For more information, see Google Cloud AI Documentation.

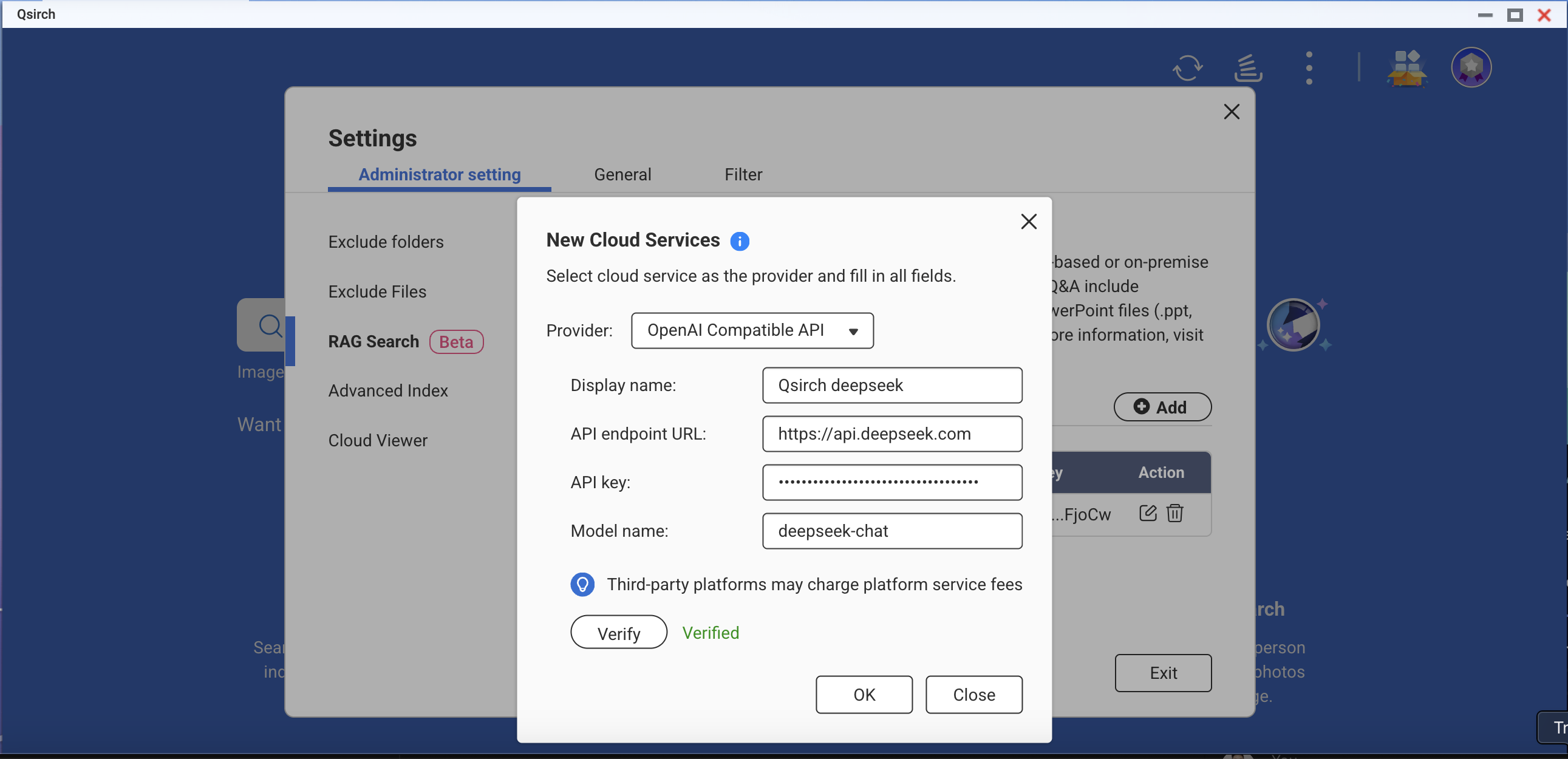
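To check a new Gemini key outside Qsirch, one option is to list the models it can access through the Gemini API `models` endpoint. A minimal sketch, assuming the `v1beta` endpoint and the `x-goog-api-key` header; an invalid key makes the request fail with an HTTP error. Only the first page of results is read.

```python
import json
import urllib.request

GEMINI_MODELS_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_gemini_key_check(api_key: str) -> urllib.request.Request:
    """Prepare a model-list request; the key goes in a header rather than the URL."""
    return urllib.request.Request(
        GEMINI_MODELS_URL,
        headers={"x-goog-api-key": api_key},
        method="GET",
    )


def list_gemini_models(api_key: str, timeout: float = 10.0) -> list[str]:
    """Return model names (for example "models/...") from the first page of results."""
    with urllib.request.urlopen(build_gemini_key_check(api_key), timeout=timeout) as resp:
        data = json.load(resp)
    return [model["name"] for model in data.get("models", [])]
```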

OpenAI-Compatible API

An OpenAI-compatible API lets you access AI models beyond OpenAI's own offerings, such as DeepSeek and Grok, using the same request format as the OpenAI API. Applications built for the OpenAI API can therefore use these models with minimal changes. To obtain an API key:

- Choose a provider that supports an OpenAI-compatible API (for example, DeepSeek or Grok).

- Sign up on the provider's platform.

- Generate an API key in the account settings.

- Set the API endpoint (base URL) and model name to the values specified by the provider.

For more information, refer to the provider's official API documentation.
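Because these providers share one request format, switching provider usually changes only the base URL, the API key, and the model name, matching the endpoint and model settings step above. A minimal sketch; the base URLs are examples to confirm against each provider's current documentation, and any model name you pass (such as `deepseek-chat`) should likewise be checked.

```python
import json
import urllib.request

# Example base URLs; confirm current values in each provider's documentation.
PROVIDERS = {
    "openai": "https://api.openai.com/v1",
    "deepseek": "https://api.deepseek.com",
    "xai": "https://api.x.ai/v1",
}


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Same path, headers, and body shape for every OpenAI-compatible provider."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Only the arguments differ between providers, for example `build_chat_request(PROVIDERS["deepseek"], key, "deepseek-chat", "Hello")` versus the same call with `PROVIDERS["openai"]` and an OpenAI model name.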

On-Premise RAG Search

To enable on-premise RAG:

- Ensure that the LLM Core is ready.

- Set the GPU to Container Station mode (go to Control Panel > Hardware > Hardware Resources > Resource Use > Container Station mode).

How to Use RAG Search in Qsirch

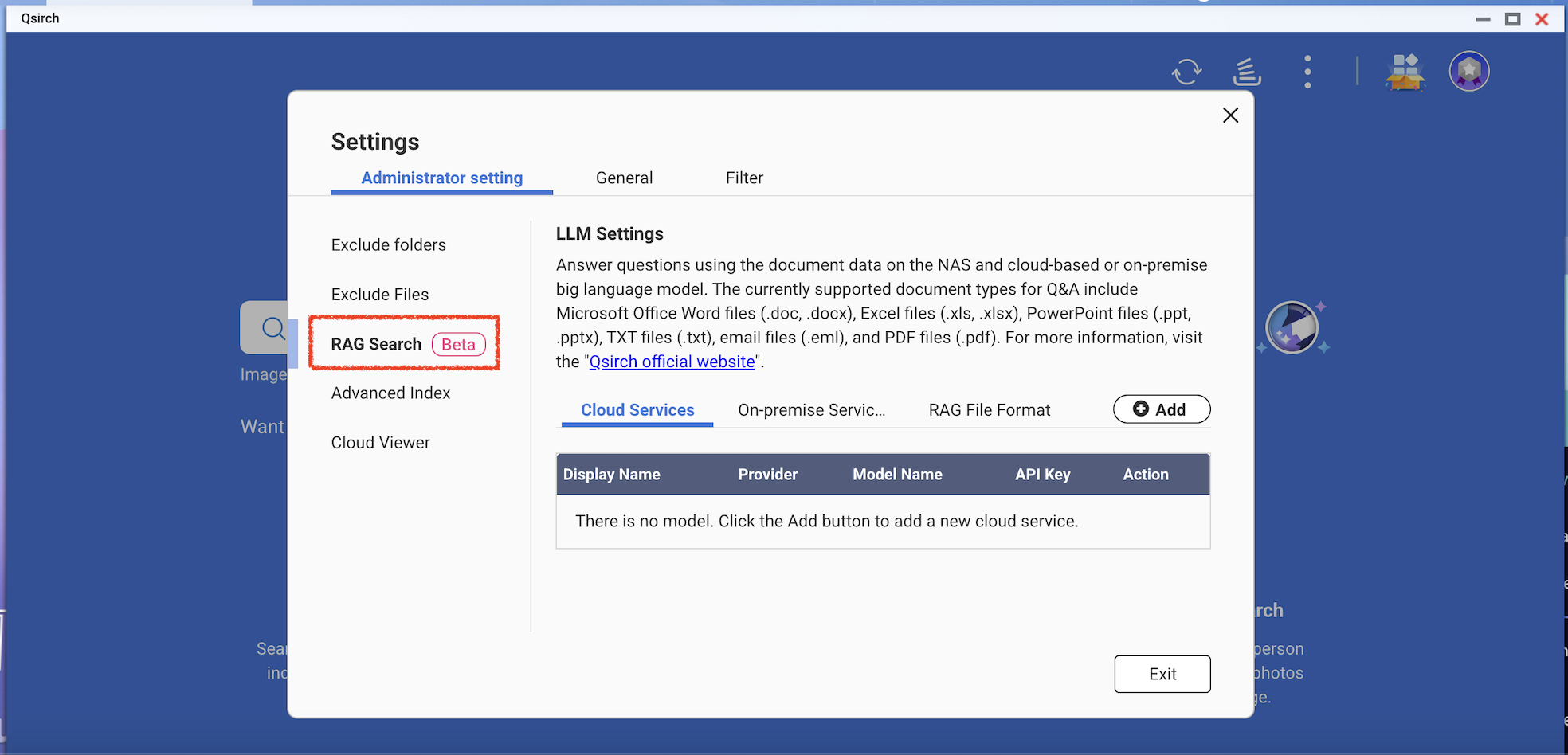

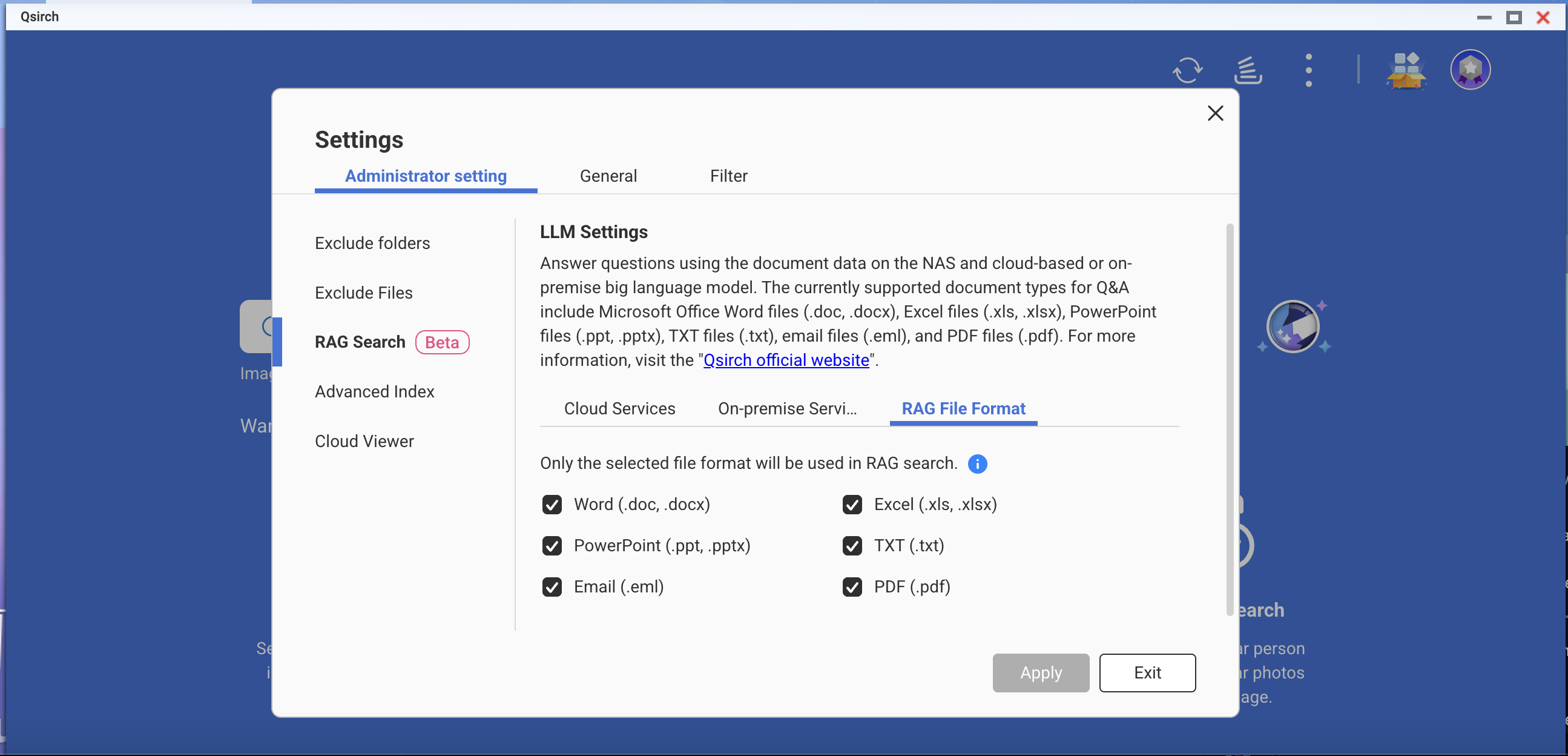

- Go to Settings > Administrative setting > RAG Search.

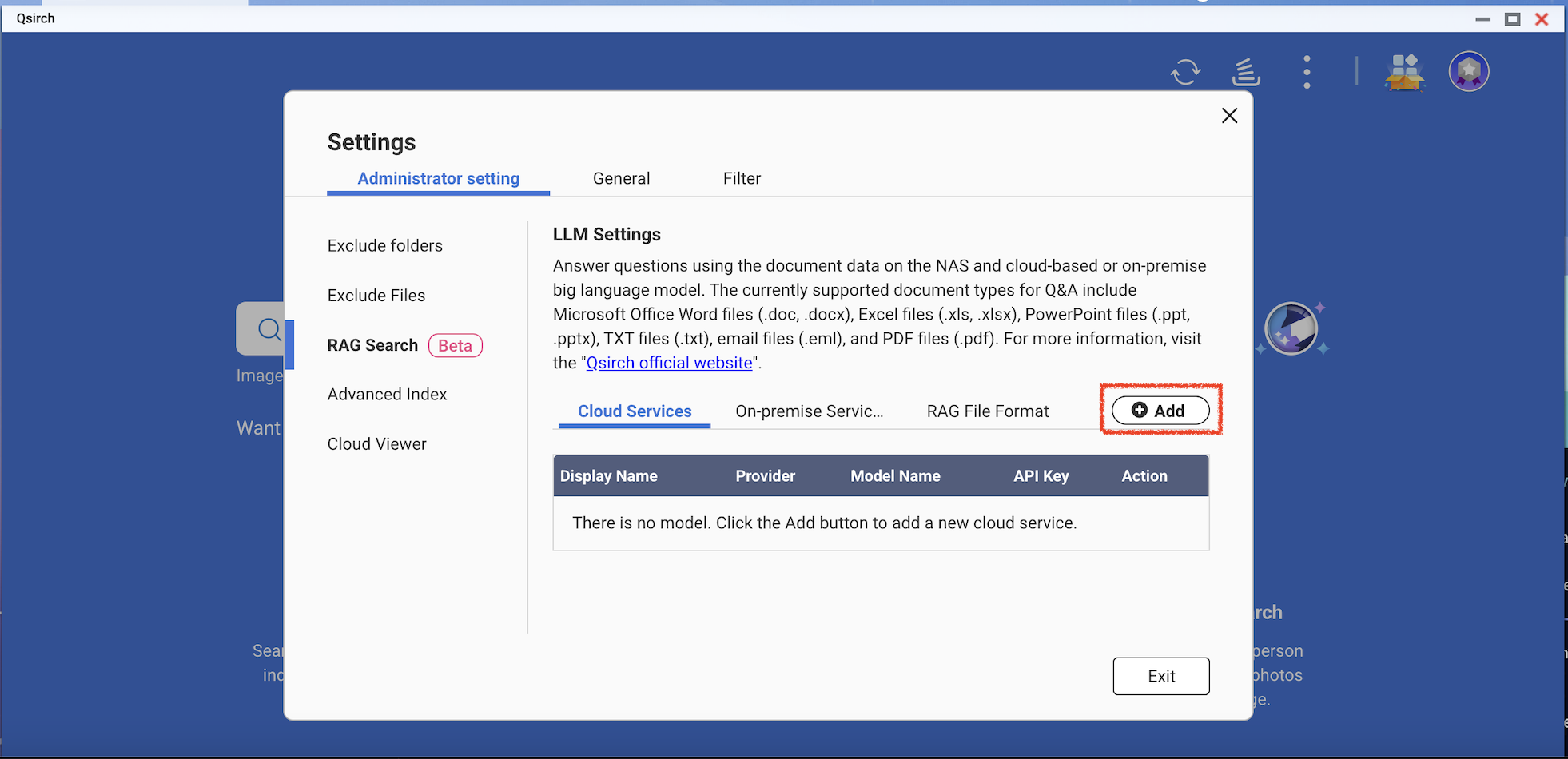

- Add one or more cloud services to use RAG search.

(Optional) Verify your API key to check whether it is valid.

Download one or more edge models from the on-premise services. This feature is available only on specific NAS models.

- Add more AI models that are compatible with the OpenAI API, such as GPT-series, DeepSeek, and Grok models.

- Choose the file formats to include in RAG search.

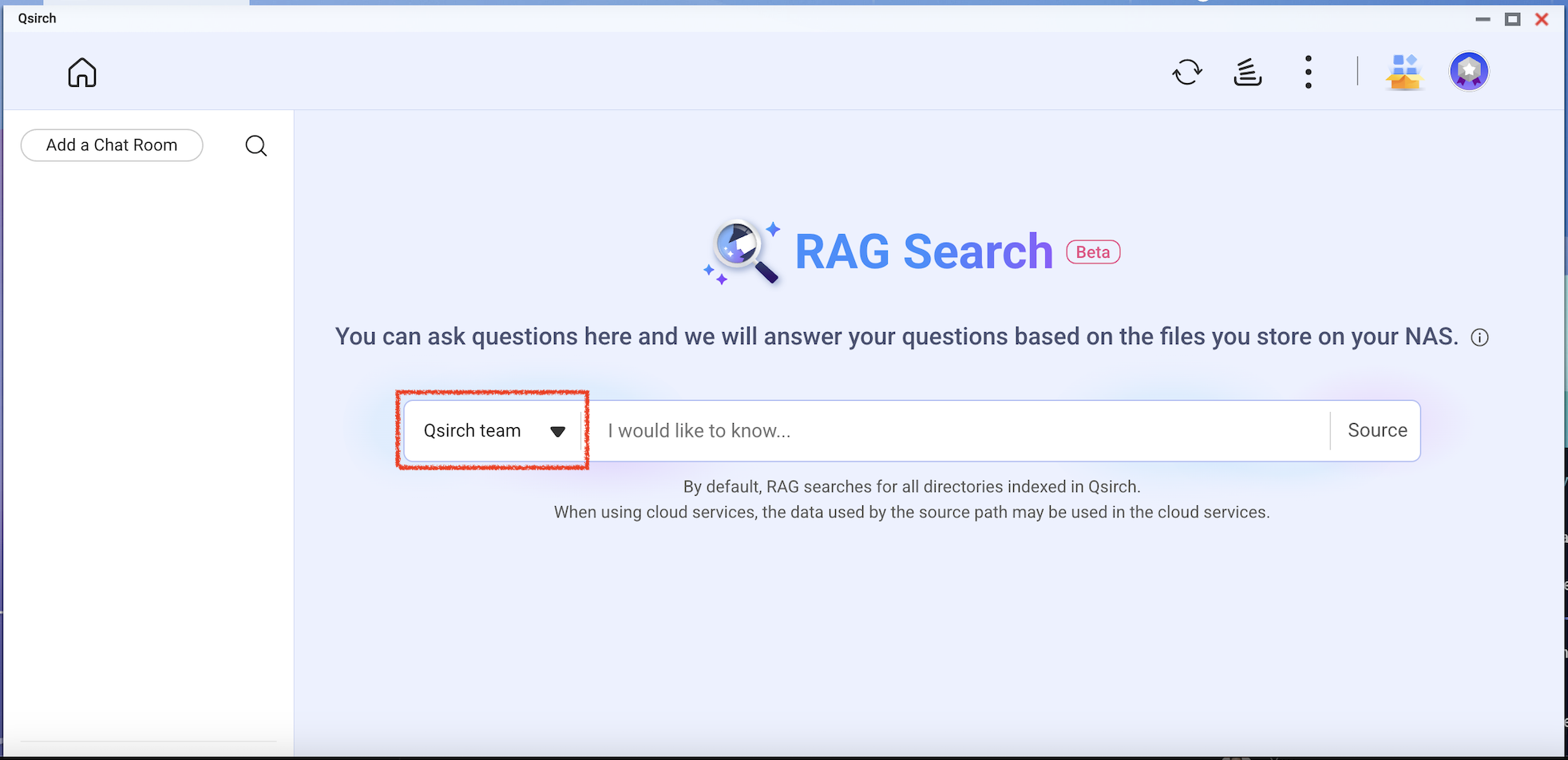

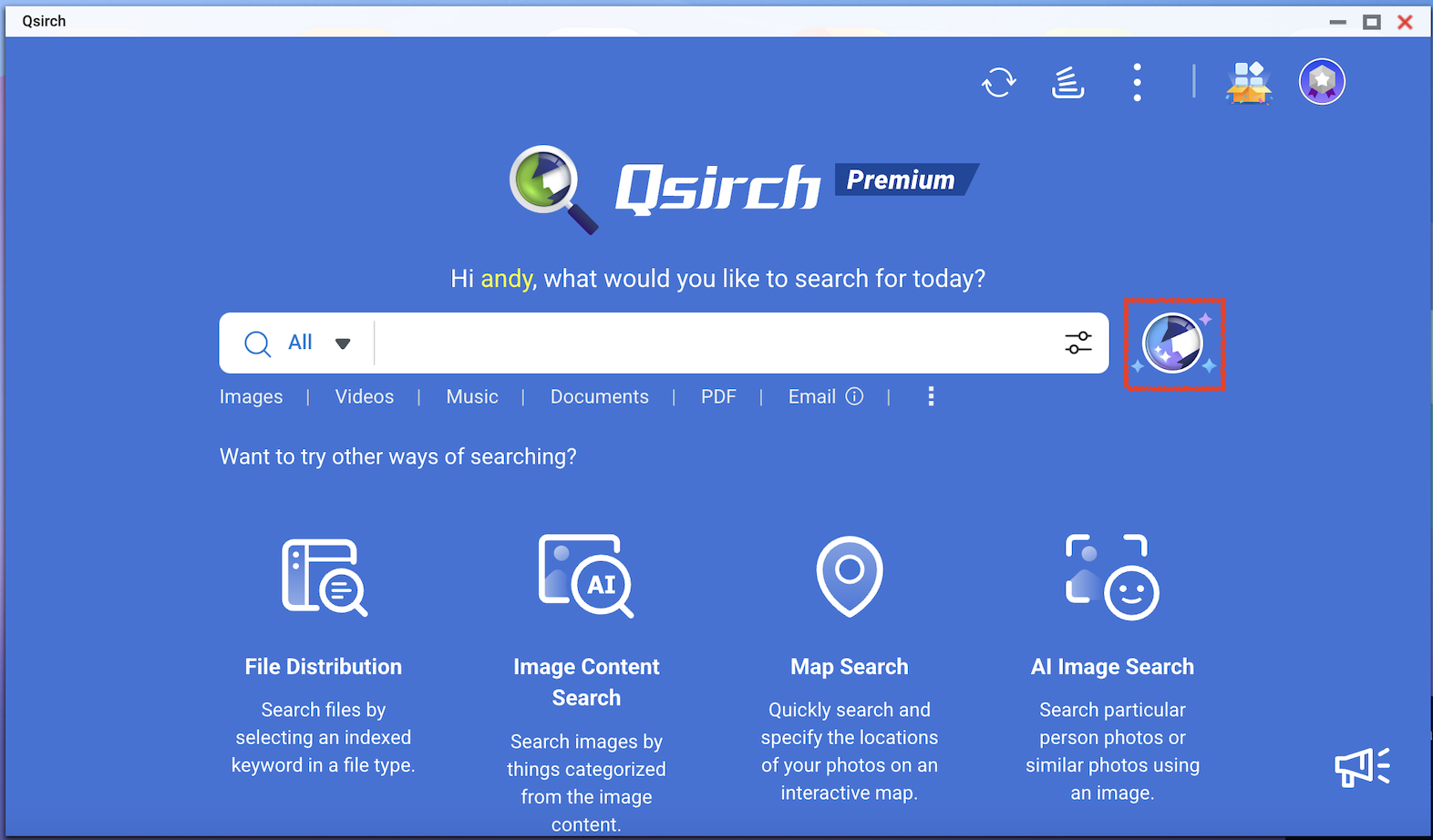

- Go to the Qsirch home page and click the RAG search button.

Qsirch RAG search retrieves data only from the files included in "Source".

Select the model you want to use, and then start your RAG search.
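Taken together, the "Source" folders and the selected file formats decide which files RAG search can draw on. The sketch below illustrates that rule with hypothetical folder paths and format names; it is not how Qsirch applies these settings internally.

```python
from pathlib import PurePosixPath


def in_rag_scope(path: str, sources: list[str], formats: set[str]) -> bool:
    """True if the file lies under a Source folder and has one of the selected formats."""
    p = PurePosixPath(path)
    # is_relative_to compares whole path components, so /share/ProjectsOld
    # does not count as being inside /share/Projects.
    under_source = any(p.is_relative_to(src) for src in sources)
    return under_source and p.suffix.lower().lstrip(".") in formats


if __name__ == "__main__":
    sources = ["/share/Projects"]  # hypothetical Source folder
    formats = {"pdf", "docx"}      # hypothetical selected formats
    for f in ["/share/Projects/spec.PDF", "/share/Projects/photo.jpg", "/share/Other/spec.pdf"]:
        print(f, in_rag_scope(f, sources, formats))
```

If an answer seems to ignore a document you expected, checking both conditions (folder in "Source" and format selected) is a reasonable first step.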