How to use TensorFlow with Container Station

Last modified date: 2019-09-24

About TensorFlow

TensorFlow™ is an open-source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the edges represent the multidimensional data arrays (tensors) that flow between them.
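To make the data flow graph idea concrete, here is a minimal sketch in plain Python. It does not use the TensorFlow API; the `Node` class and the `constant`, `matmul`, and `add` helpers are hypothetical names introduced only for illustration. Each node holds an operation, and the values passed from one node to the next play the role of tensors (here, nested lists).

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """One vertex of the graph: an operation plus the nodes feeding it."""
    name: str
    op: Callable[..., list]
    inputs: List["Node"] = field(default_factory=list)

    def evaluate(self) -> list:
        # Evaluate upstream nodes first; their results travel along the edges.
        args = [node.evaluate() for node in self.inputs]
        return self.op(*args)


def constant(name: str, value: list) -> Node:
    """A source node with no inputs that always yields the same tensor."""
    return Node(name, lambda: value)


def matmul(a: list, b: list) -> list:
    """Matrix product of two 2-D nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: list, b: list) -> list:
    """Element-wise sum of two 2-D nested lists of the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


# Build the graph for x @ w + b.
x = constant("x", [[1.0, 2.0]])
w = constant("w", [[3.0], [4.0]])
b = constant("b", [[0.5]])
product = Node("matmul", matmul, [x, w])
result = Node("add", add, [product, b])

print(result.evaluate())  # [[11.5]]
```

Nothing is computed while the graph is being built; the work happens only when `evaluate()` is called on the final node. That separation between describing a computation and running it is the point of the graph model.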

Installing TensorFlow in Container Station

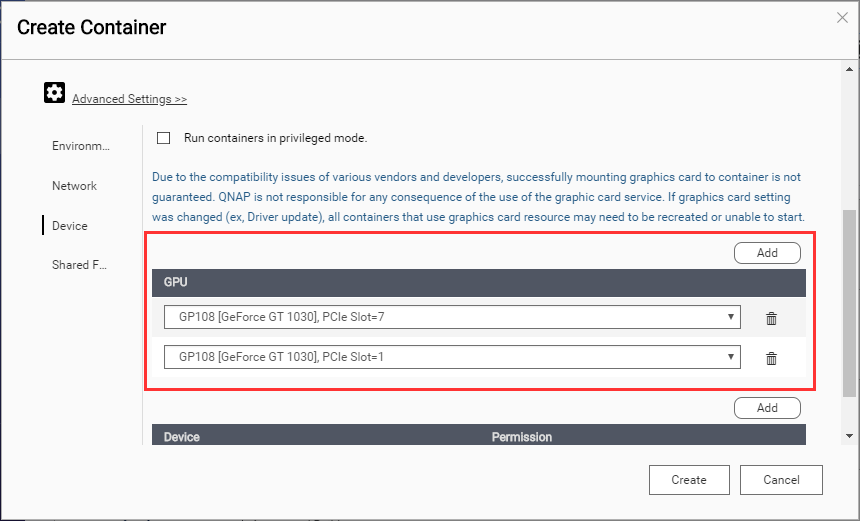

Mounting an NVIDIA GPU via SSH

Accessing the Container

You can now use Jupyter Notebook with TensorFlow.
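As a quick sanity check, a first notebook cell along the following lines can report which TensorFlow version the kernel sees and whether any GPU is visible. This is a sketch, not part of the original guide: `describe_tensorflow` is a hypothetical helper name, and the code assumes that the installed TensorFlow exposes `tf.config.list_physical_devices` (newer releases) or `tf.config.experimental.list_physical_devices` (some older releases). If neither is present, it simply reports no GPUs.

```python
import importlib


def describe_tensorflow() -> dict:
    """Report the TensorFlow version and visible GPUs for this kernel."""
    try:
        tf = importlib.import_module("tensorflow")
    except ImportError:
        # TensorFlow is not installed in this environment.
        return {"installed": False, "version": None, "gpus": []}

    # Look up the device-listing function without assuming a specific version.
    config = getattr(tf, "config", None)
    lister = getattr(config, "list_physical_devices", None)
    if lister is None:
        experimental = getattr(config, "experimental", None)
        lister = getattr(experimental, "list_physical_devices", None)

    gpus = [device.name for device in lister("GPU")] if lister else []
    return {
        "installed": True,
        "version": getattr(tf, "__version__", None),
        "gpus": gpus,
    }


report = describe_tensorflow()
print(report)
```

An empty `gpus` list means the kernel does not see a GPU, in which case the GPU assignment for the container is the first thing to check.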