Best practices for testing 100GbE network performance on QNAP NAS?

Last modified date:

2025-07-04

Applicable Products

QNAP 100GbE Networking with QNAP NAS

Best Practice

Hardware Requirements

- Clients:

- PCIe Gen3 x16 or Gen4 x8 slot

- High-performance CPU with strong single-core frequency.

- Adequate system memory to handle high throughput.

- High-speed storage solution or RAM disk for accurate throughput testing.

- NAS:

- PCIe Gen3 x16 or Gen4 x8 slot for NIC

- Validate disk model performance

- Cards, cables, and the GBIC:

- The 100GbE card and the GBIC are on the compatibility list for the NAS model.

- QSFP cables (4-lane combined) compatible with 100Gb networks.

Environment Setup

- Install the correct network drivers on both the PC and the NAS:

- PC Side Configuration

- Set MTU to 9000.

- Disable Interrupt Moderation (particularly on Windows).

- Check disk I/O capability.

Info

If your NAS is offline, download the ‘Advanced Network Driver’ package from QNAP’s official driver center and install it manually.

- NAS Side Configuration

- Set MTU to 9000.

- Use ifconfig to check TX/RX errors.

- In the SAMBA advanced settings, ensure that KSMBD and AIO are enabled.

- Ensure that snapshots and encrypted volumes/shares are disabled.

- Do not use an SED pool.

- CPU Performance Monitoring

- During performance tests, closely monitor CPU utilization using tools such as top or htop.

- If any single CPU core exhibits sustained high usage (approaching 100%), it indicates a CPU bottleneck. This means the CPU is insufficiently powerful for 100Gb network testing.

Info

Upgrade to a higher-performance CPU with greater single-core frequency to continue accurate testing.
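The per-core check described above can also be scripted instead of read off top or htop. The following is a minimal sketch, assuming a Linux system that exposes per-core counters in /proc/stat. The function name core_busy_pct is hypothetical and is not part of any QNAP tool. It takes two samples of one core's /proc/stat line and prints how busy that core was between them.

```shell
#!/bin/sh
# core_busy_pct: hypothetical helper, not a QNAP tool.
# Arguments: two samples of the same "cpuN ..." line from /proc/stat.
# Prints the percentage of time the core was busy between the samples.
core_busy_pct() {
  printf '%s\n%s\n' "$1" "$2" | awk '
    {
      # Fields 2-9 are user, nice, system, idle, iowait, irq, softirq, steal.
      n = (NF < 9) ? NF : 9
      total = 0
      for (i = 2; i <= n; i++) total += $i
      idle = $5 + $6   # idle + iowait
      if (NR == 1) { t0 = total; i0 = idle } else { t1 = total; i1 = idle }
    }
    END {
      dt = t1 - t0
      if (dt <= 0) { print 0; exit }
      printf "%.0f\n", 100 * (dt - (i1 - i0)) / dt
    }'
}

# Example on a live system (samples cpu0 one second apart):
#   a=$(grep '^cpu0 ' /proc/stat); sleep 1; b=$(grep '^cpu0 ' /proc/stat)
#   core_busy_pct "$a" "$b"
```

A value that stays close to 100 for one core during the test points to the single-core bottleneck described above.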

Networking test with iperf3

Warning

Single-threaded or single-process tests typically will not achieve maximum 100Gb network performance.

- Multi-process Test Methodology

- On NAS (server-side):

- From an SSH console, start multiple iperf3 servers, one per port:

iperf3 -s -f M -p 50001 &

iperf3 -s -f M -p 50002 &

iperf3 -s -f M -p 50003 &

iperf3 -s -f M -p 50004 &

- After testing is complete:

killall iperf3

- On Client Side:

- Conduct tests with multiple parallel processes to fully utilize the 100Gb bandwidth

- Linux example:

iperf3 -c <IP> -f M -i 1 -P 4 -t 60 -p 50001 &

iperf3 -c <IP> -f M -i 1 -P 4 -t 60 -p 50002 &

iperf3 -c <IP> -f M -i 1 -P 4 -t 60 -p 50003 &

iperf3 -c <IP> -f M -i 1 -P 4 -t 60 -p 50004 &

- Windows batch script:

@echo off

start "iperf3-50001" cmd /k "iperf3.exe -c <ip> -f M -i 1 -t 60 -P 1 -p 50001"

start "iperf3-50002" cmd /k "iperf3.exe -c <ip> -f M -i 1 -t 60 -P 1 -p 50002"

start "iperf3-50003" cmd /k "iperf3.exe -c <ip> -f M -i 1 -t 60 -P 1 -p 50003"

start "iperf3-50004" cmd /k "iperf3.exe -c <ip> -f M -i 1 -t 60 -P 1 -p 50004"

echo press any key to close

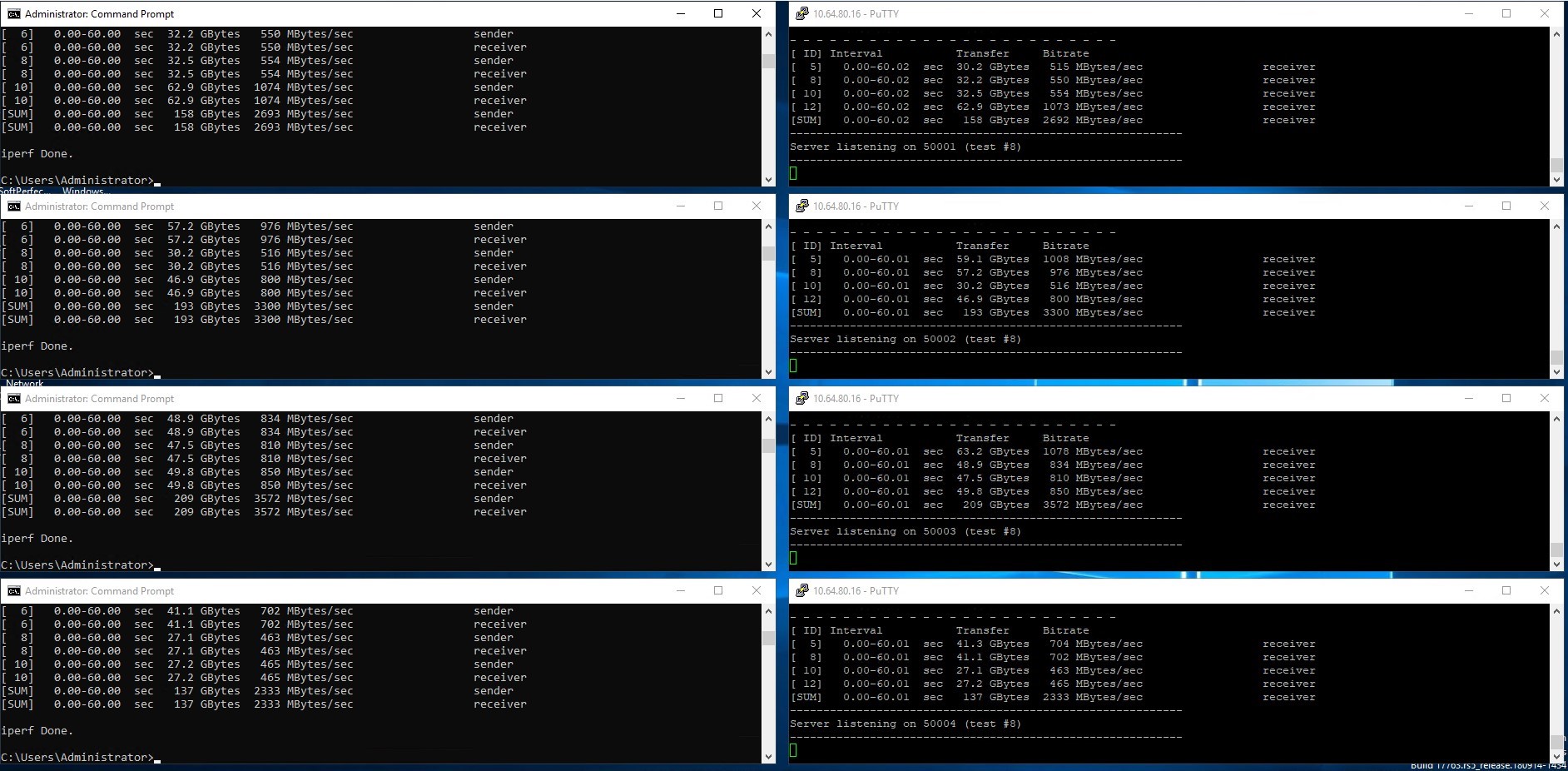

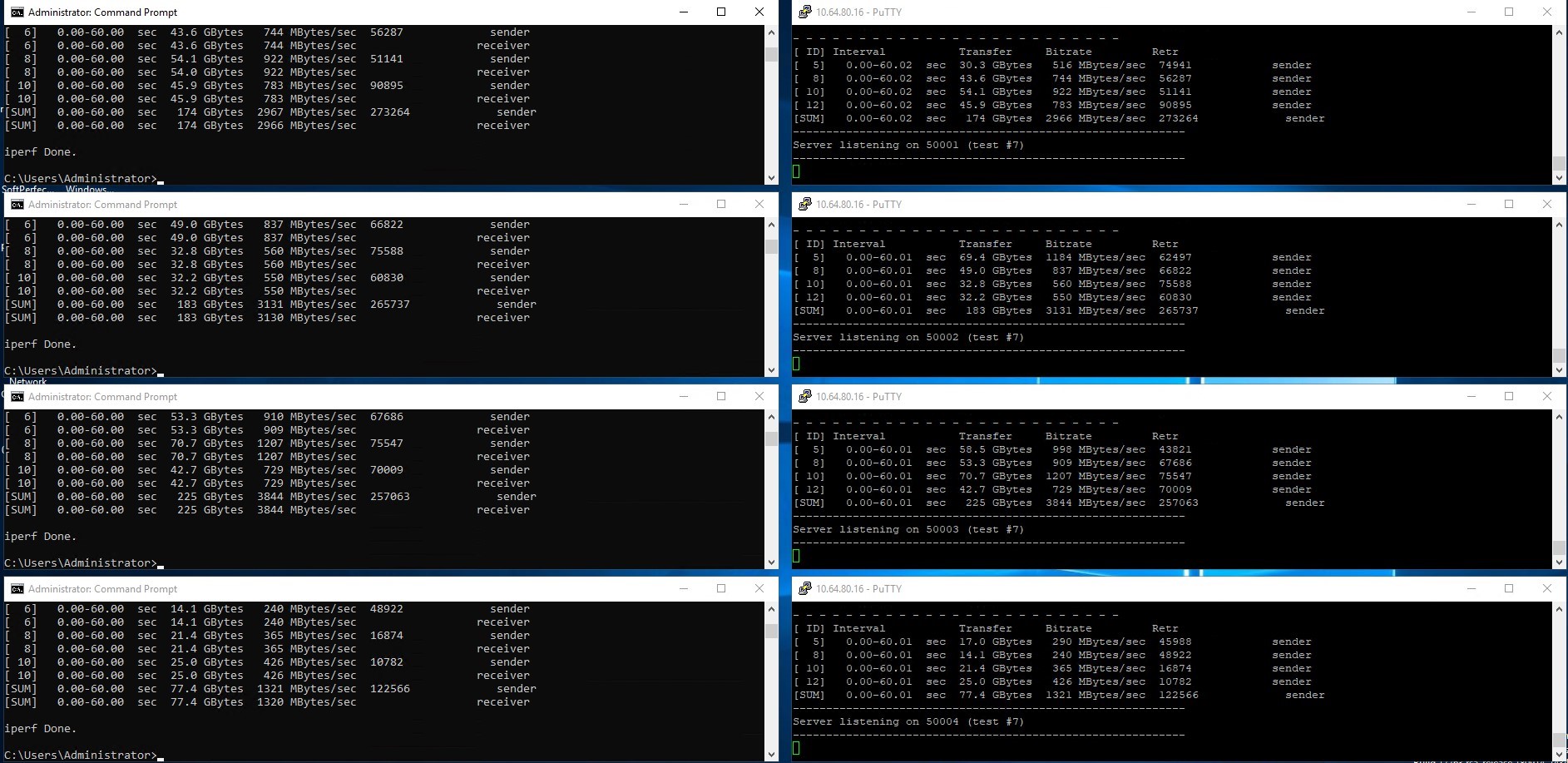

pause >nul- iperf3 example:

- Write speed = 2692 + 3300 + 3572 + 2333 = 11897 MB/s

- Read speed = 2966+3130+3844+1320 = 11260 MB/s
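The totals above are the sum of the per-port results from the four iperf3 processes. As a minimal sketch of that arithmetic, the following adds the per-port figures and converts the total to Gbit/s for comparison with the 100Gb line rate. The helper names sum_mbps and mbps_to_gbit are hypothetical, and the conversion assumes decimal megabytes (1 MB = 10^6 bytes). If the tool reports binary megabytes, the bit rate would be about 4.9% higher.

```shell
#!/bin/sh
# sum_mbps and mbps_to_gbit are hypothetical helper names.

# Add up per-port throughput figures given in MB/s.
sum_mbps() {
  total=0
  for v in "$@"; do
    total=$((total + v))
  done
  echo "$total"
}

# Convert MB/s to Gbit/s, ASSUMING decimal megabytes (1 MB = 10^6 bytes).
mbps_to_gbit() {
  awk -v m="$1" 'BEGIN { printf "%.1f\n", m * 8 / 1000 }'
}

# Per-port figures taken from the iperf3 example above.
write_total=$(sum_mbps 2692 3300 3572 2333)
read_total=$(sum_mbps 2966 3130 3844 1320)
echo "write: ${write_total} MB/s = $(mbps_to_gbit "$write_total") Gbit/s"
echo "read:  ${read_total} MB/s = $(mbps_to_gbit "$read_total") Gbit/s"
```

Under the decimal assumption this reports roughly 95.2 Gbit/s for write and 90.1 Gbit/s for read.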

File System Testing

- FIO for Linux:

fio --filename=Test1 --direct=1 --rw=write --bs=1M --ramp_time=10 --runtime=60 --name=test-write --ioengine=libaio --iodepth=32 --numjobs=32 --size=100G

fio --filename=Test2 --direct=1 --rw=read --bs=1M --ramp_time=10 --runtime=60 --name=test-read --ioengine=libaio --iodepth=32 --numjobs=32 --size=100G

- FIO for Windows:

fio --filename=fio.dat --rw=write --bs=1M --ramp_time=10 --runtime=60 --name=test-write --ioengine=windowsaio --iodepth=32 --numjobs=32 --size=100G

fio --filename=fio.dat --rw=read --bs=1M --ramp_time=10 --runtime=60 --name=test-read --ioengine=windowsaio --iodepth=32 --numjobs=32 --size=100G --fallocate=none

Info

Windows File Explorer and Robocopy use a single thread, which limits performance.

- Windows File Explorer:

- File Explorer copies with a single thread.

- Write performance improves with multi-process copying.

- Read performance is limited by the CPU core.

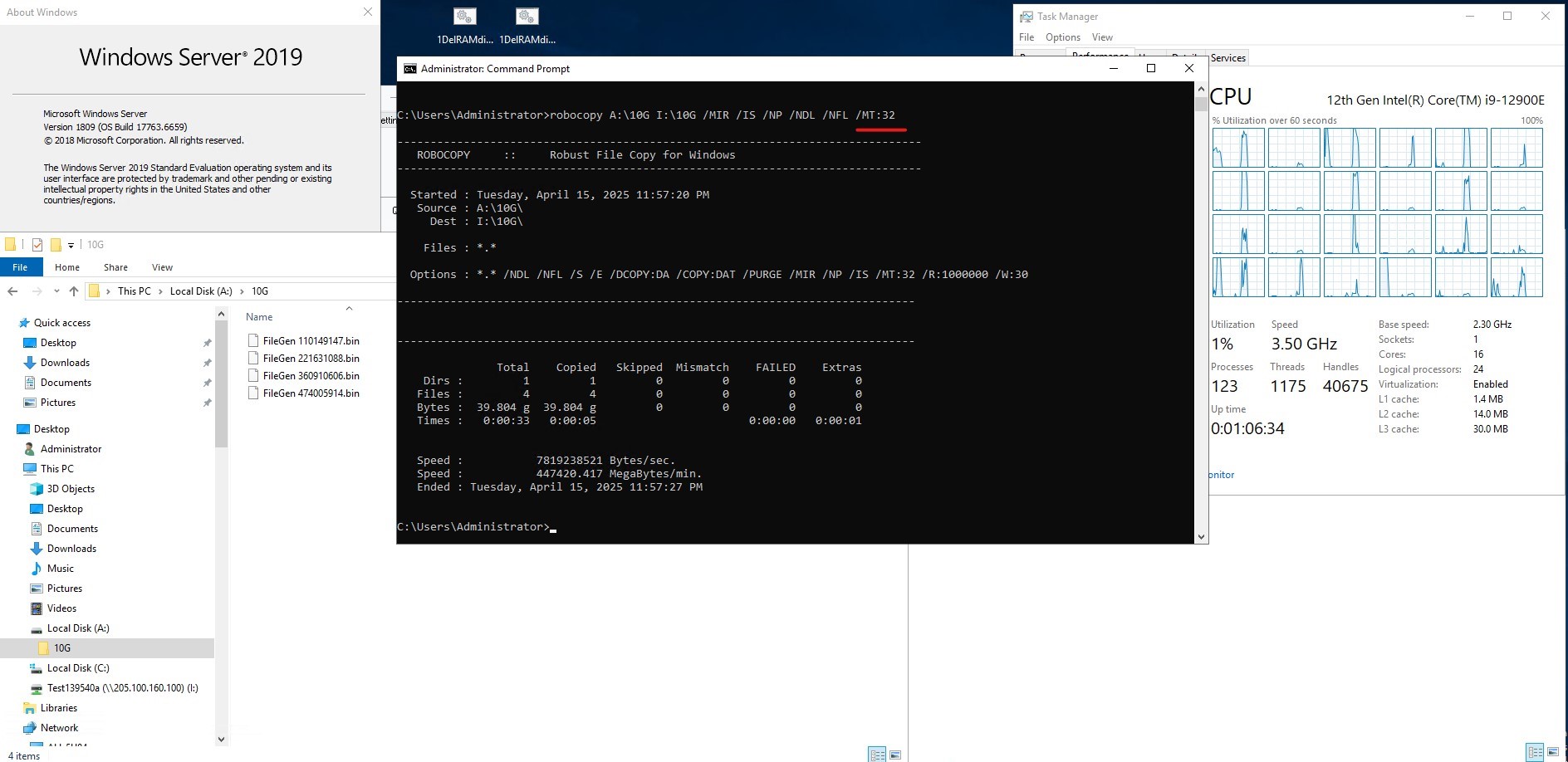

- Robocopy:

- Write Performance

robocopy A:\10G I:\10G /MIR /IS /NP /NDL /NFL /MT:32

Write Performance = 7819298321 Bytes/sec = 7453 MB/s

- Robocopy is limited in read performance.

Checklist by layers:

- Hardware connection and Interface checking.

- Checked that the hardware supports 100Gb

- Checked that the 100Gb adapter and the fibre module/DAC cable are on the compatibility list.

- Confirmed connection rate and Duplex mode

- System resource and interrupt (Linux client)

- Checked CPU core loading and interrupt

- Check the usage of every CPU core:

top

htop

- Check which CPU cores handle the ethX interrupts:

cat /proc/interrupts | grep ethX

- Debug IRQ distribution across CPU cores:

irqbalance --debug

- Ethernet adapter to set CPU core affinity (RPS / XPS)

- List the CPU cores:

lscpu | grep '^CPU(s):'

- Set which cores handle the threads:

echo <core mask> > /sys/class/net/ethX/queues/rx-0/rps_cpus

- Check Ethernet adapter driver & firmware version

ethtool -i ethX
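The core mask written to rps_cpus is a hexadecimal bitmap in which bit N selects CPU core N. A minimal sketch for building that value from a list of core numbers follows. The helper name cpu_mask is hypothetical, and the core numbers used are only examples.

```shell
#!/bin/sh
# cpu_mask: hypothetical helper that builds a hexadecimal CPU bitmask
# from a list of core numbers (bit N set means core N is selected).
# Limited to cores 0-62 by the shell's 64-bit signed arithmetic.
cpu_mask() {
  mask=0
  for core in "$@"; do
    mask=$((mask | (1 << core)))
  done
  printf '%x\n' "$mask"
}

cpu_mask 0 1 2 3   # cores 0-3, prints f
cpu_mask 4 5       # cores 4 and 5, prints 30

# Example (run as root, replace ethX with the real interface name):
#   echo "$(cpu_mask 0 1 2 3)" > /sys/class/net/ethX/queues/rx-0/rps_cpus
```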

- Network stack and performance parameters (Linux client)

- The managed switch must also be set to MTU 9000

- Set MTU (Jumbo Frame) to 9000

ifconfig ethX mtu 9000 up

- Check TCP buffer limit

- Suggested values

sysctl -w net.core.rmem_max=16777216

sysctl -w net.core.wmem_max=16777216

- Enabled TSO / GSO / SG

ethtool -K ethX tso on gso on sg on
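Before changing the TCP buffer limits, it can help to confirm what the client currently uses. The following is a minimal sketch, assuming a Linux client where sysctl keys are readable under /proc/sys. The helper names meets_minimum and check_buffer are hypothetical. It compares the current value of a key with the suggested minimum of 16777216.

```shell
#!/bin/sh
# meets_minimum and check_buffer are hypothetical helper names.

# Succeeds when the current value is at least the suggested minimum.
meets_minimum() {
  [ "$1" -ge "$2" ]
}

# Read a sysctl key through /proc/sys and compare it with a minimum.
check_buffer() {
  key=$1
  want=$2
  path="/proc/sys/$(echo "$key" | tr . /)"
  if [ ! -r "$path" ]; then
    echo "$key: not readable on this system"
    return 2
  fi
  have=$(cat "$path")
  if meets_minimum "$have" "$want"; then
    echo "$key: $have (ok)"
  else
    echo "$key: $have (below suggested $want)"
    return 1
  fi
}

# Example:
#   check_buffer net.core.rmem_max 16777216
#   check_buffer net.core.wmem_max 16777216
```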

- Alternative Actual Performance Test

- Single machine test

- External test

- Use a RAM disk to avoid a disk bottleneck

- Linux

mkdir -p /mnt/ramdisk

mount -t tmpfs -o size=16G tmpfs /mnt/ramdisk

- Windows: Search for a RAM disk tool.

- Run a transfer test (SMB / iSCSI / NFS)

- Store the test source file on the RAM disk

- Cross-check with other tools, for example: dd / robocopy / rsync / fio
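To place a test source file on the RAM disk, dd is one option. The following is a minimal sketch, assuming a Linux client with a dd that accepts bs=1M. The helper name make_test_file and the file name ramdisk_test.bin are hypothetical. The file is filled with zeros, which compress well, so results may be optimistic if any layer in the path compresses data.

```shell
#!/bin/sh
# make_test_file: hypothetical helper that writes a test file of SIZE_MIB
# mebibytes into DIR (for example a tmpfs mount such as /mnt/ramdisk).
# Assumes a dd that accepts bs=1M (GNU coreutils or BusyBox).
make_test_file() {
  dir=$1
  size_mib=$2
  dd if=/dev/zero of="$dir/ramdisk_test.bin" bs=1M count="$size_mib" 2>/dev/null
}

# Example: create a 10 GiB source file on the RAM disk.
#   make_test_file /mnt/ramdisk 10240
```

The file size must stay below the size given to the tmpfs mount, otherwise dd stops when the RAM disk is full.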